
In this series, I will show you how to leverage Apache Airflow to build robust, production-ready workflows for your SaaS, LLM-powered apps, data processes, and anything else you can dream up.

While there are excellent resources covering specific Airflow components, such as the official tutorials and Marc Lamberti's awesome guides, my series will focus on how to work with Airflow locally, in production, and with LLMs. I'll also share best practices and patterns I've seen work first-hand.

Skip to Part 2: Running Airflow Locally →

Working with Airflow forces you through a paradigm shift in how you think about your backend. It allows you to provide your customers with more value than you can with just a traditional request-response API. I hope to leave you wondering how you ever built without it once you're finished with this series.

Let's go over some terminology first.

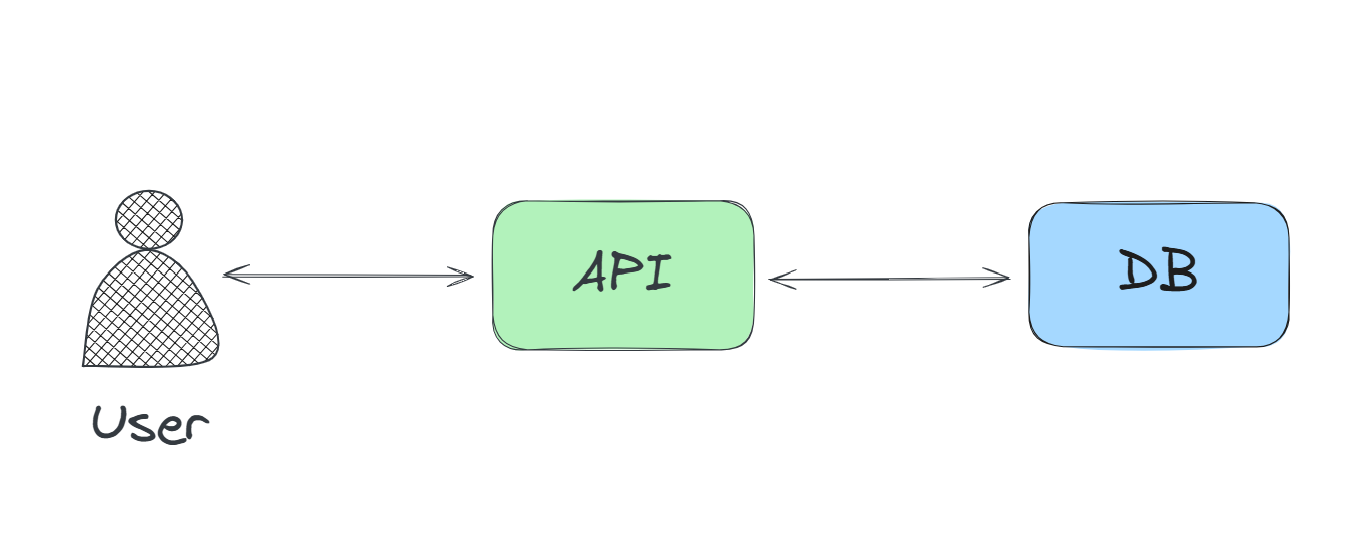

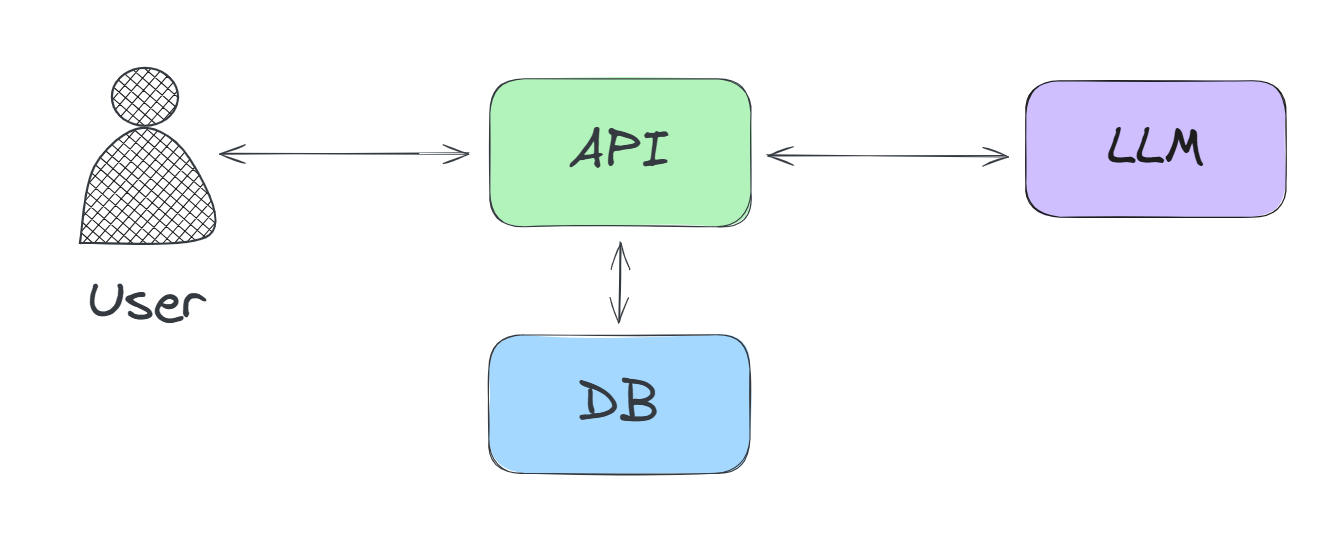

You're likely familiar with the distinction between frontend and backend. The frontend (web client) makes a synchronous request to the backend (server) when the user needs something.

Simple enough. You've launched your sleek new SaaS, you have users rolling in, things are going well.

What happens when you need to handle operations that don't fit the request-response model? What does that even look like and why do you need anything more than a simple API?

Let's go through an example scenario to illustrate the need for a workflow orchestration tool.

Imagine your TODO app has reached 100 monthly active users.

Now you want to add AI-powered task prioritization as a premium feature.

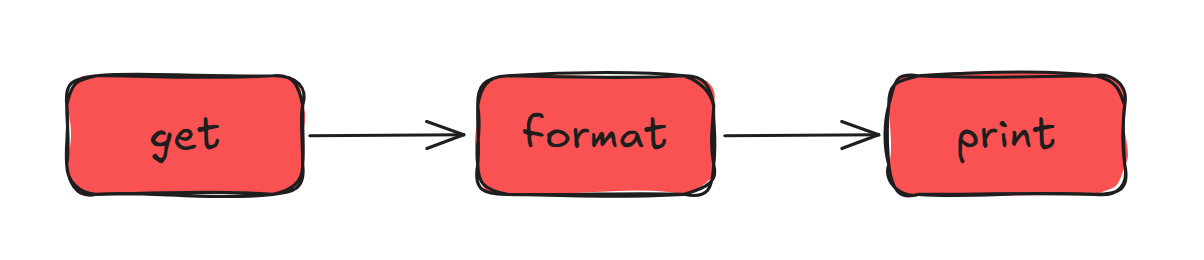

You write a script that lists all of a user's TODOs and asks an LLM to rank them by priority.

Here are three possible approaches to integrating this:

Option 1: You integrate the script into your API server. You add a CTA (call to action) on the frontend that calls the API to process the TODO list. It works for small lists and for the first few users, but large TODO lists lead to timeouts and your LLM provider starts rate limiting you.

Users with many tasks—your power users—start dropping off due to the poor experience.

Absent a workflow orchestration tool, your platform's ability to create value is limited by the maximum amount of time a synchronous request can take before users get frustrated and leave.

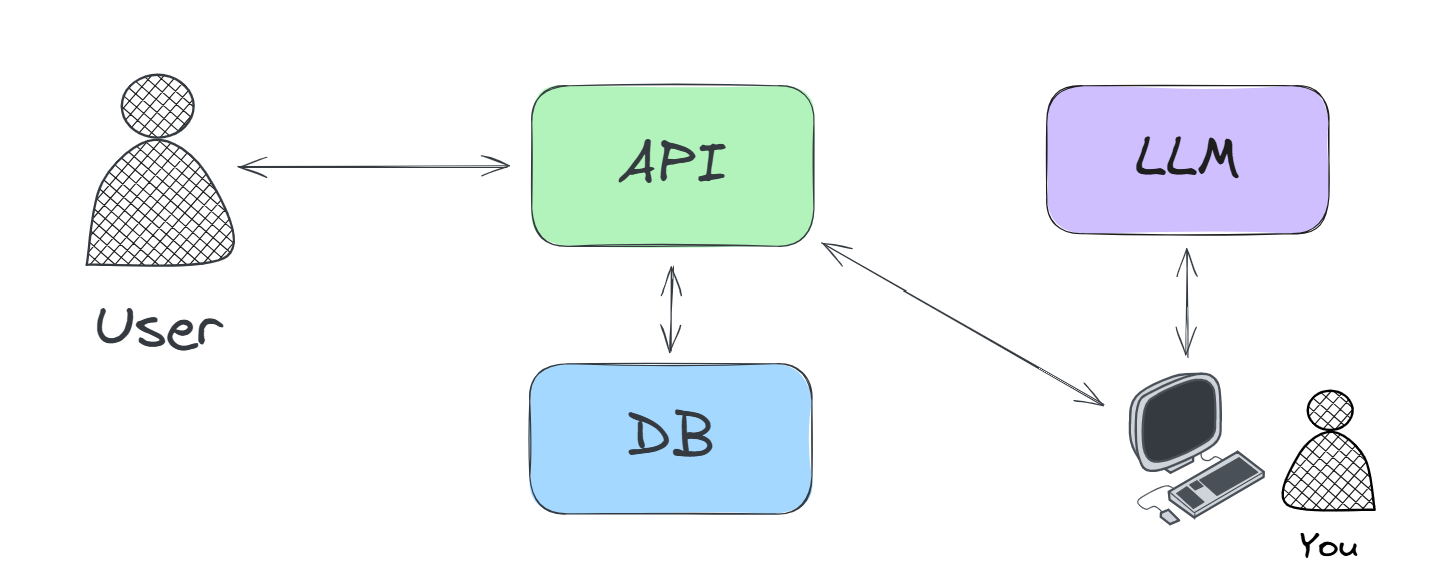

Option 2: You run the script manually to process all users' TODO lists. This works to generate initial hype, but it quickly becomes unsustainable: you have better things to do, and new items aren't processed automatically. The lack of auditability also becomes a problem once you want to involve other developers in your project.

Option 3: Asynchronous Workflow Approach

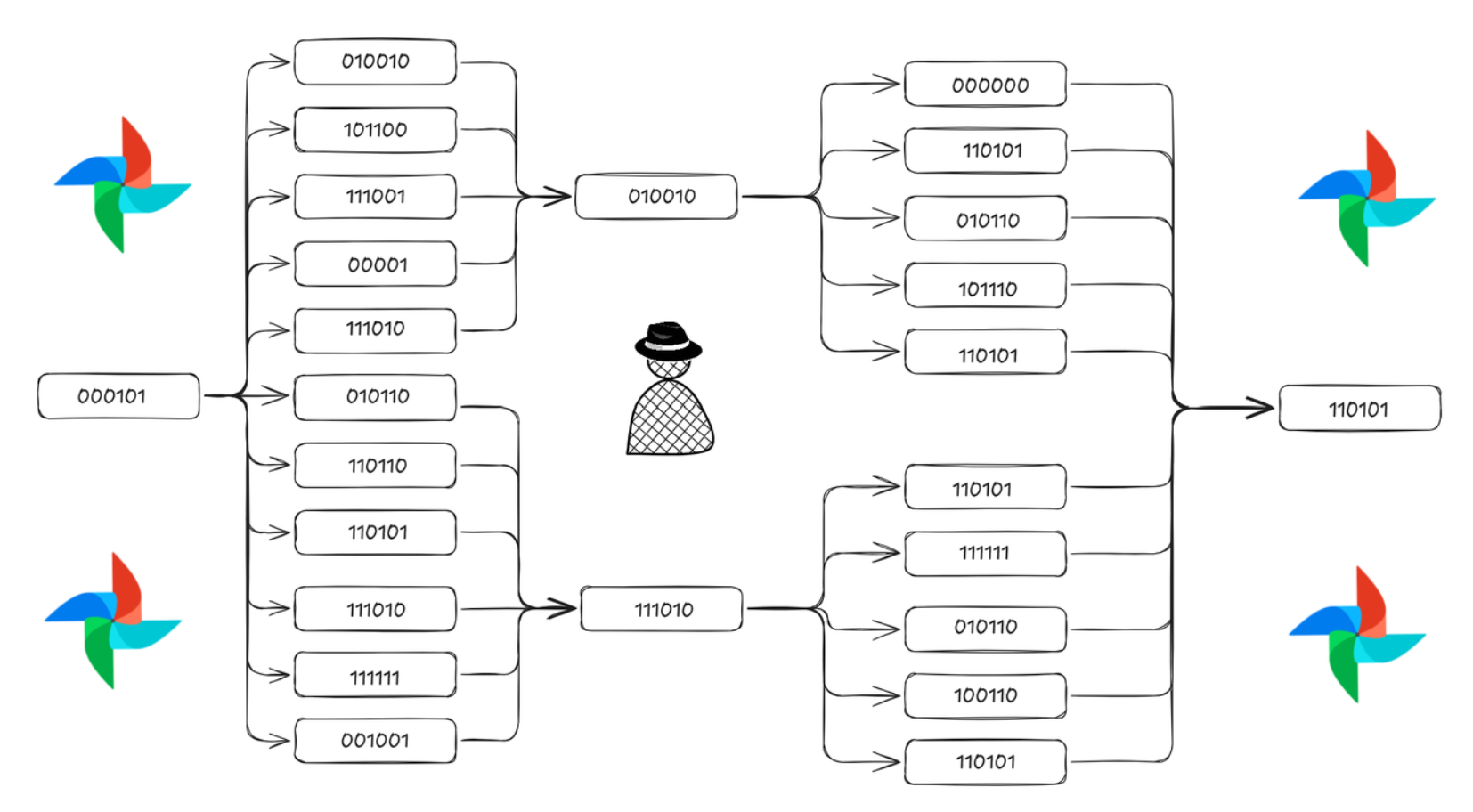

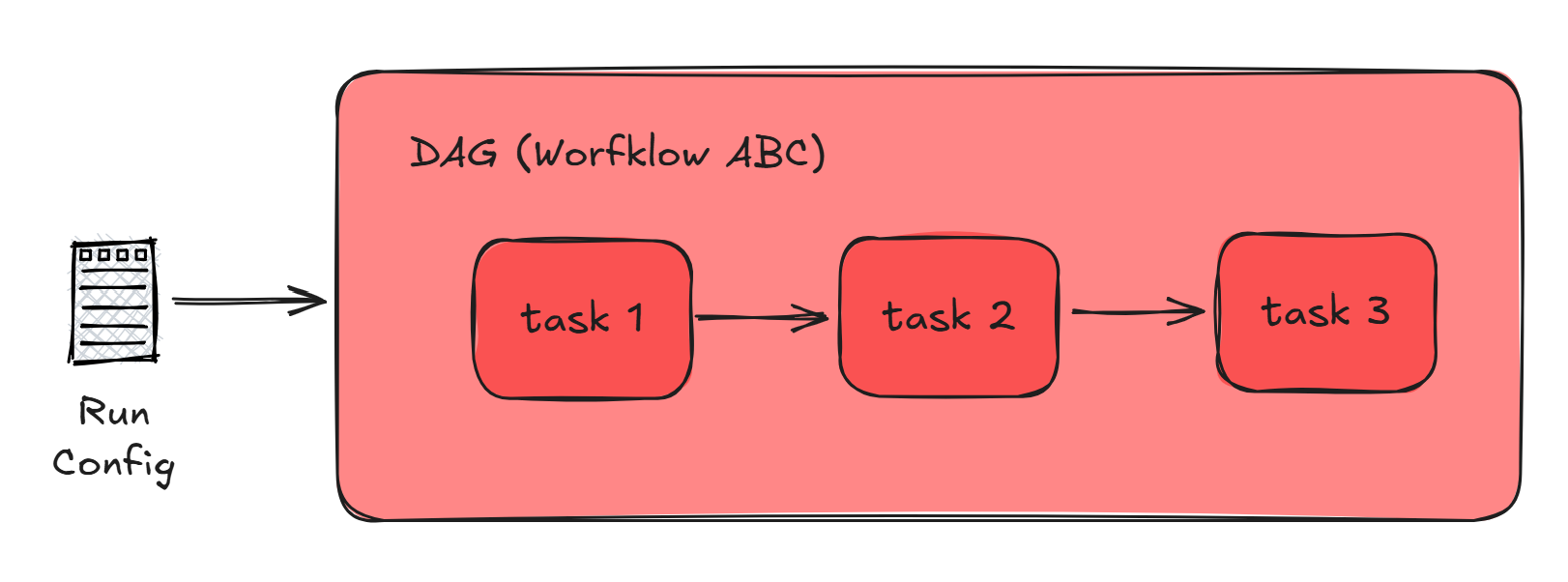

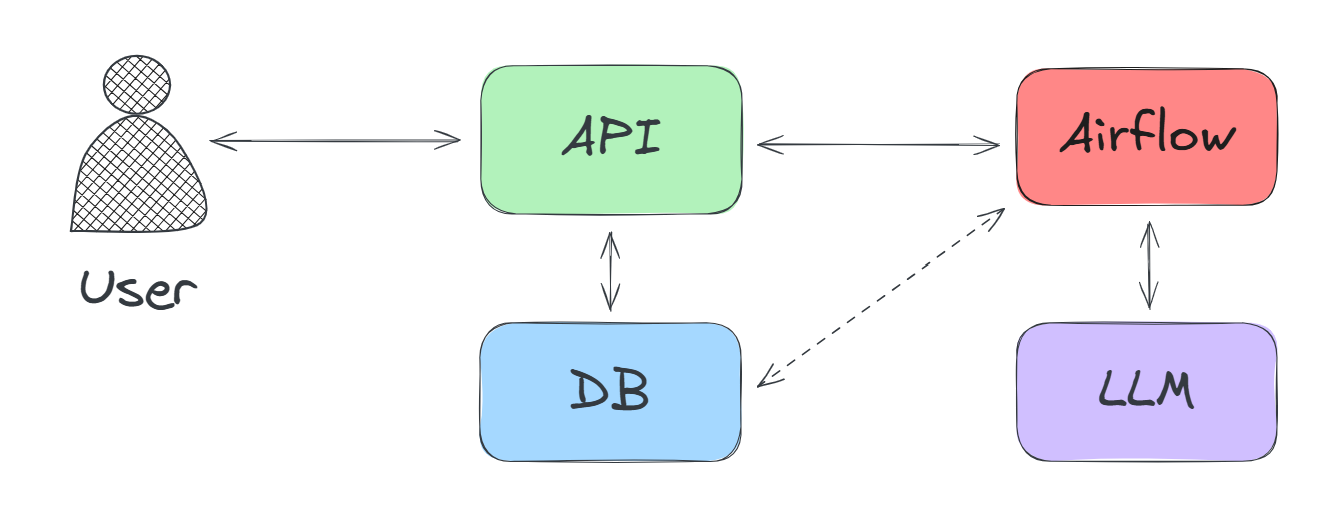

You write an Airflow DAG that neatly separates the steps of the workflow, is triggered by the API, provides real-time progress updates, and includes a way to notify users when results are ready. With some tuning, Airflow's built-in queueing mechanism processes the workflow in batches to avoid rate limits.
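To make the batching idea concrete, here is a minimal sketch in plain Python, not Airflow code. It assumes a hypothetical `score_batch` callable standing in for the LLM call and an arbitrary batch size of 20. In a real DAG each batch would typically become its own task, and Airflow settings such as pools would cap how many run at once.

```python
from typing import Callable, Iterator, List


def chunk(items: List[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def prioritize(
    todos: List[str],
    score_batch: Callable[[List[str]], List[float]],  # hypothetical LLM wrapper
    batch_size: int = 20,  # assumed value, tune to your provider's limits
) -> List[str]:
    """Score TODOs one batch at a time, then sort all of them by score."""
    scored = []
    for batch in chunk(todos, batch_size):
        scores = score_batch(batch)  # one provider call per batch
        scored.extend(zip(batch, scores))
    # Highest score first; ties keep their original order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [todo for todo, _ in scored]
```

Keeping the scoring callable as a parameter makes the batching logic easy to test with a fake scorer before any LLM provider is involved.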

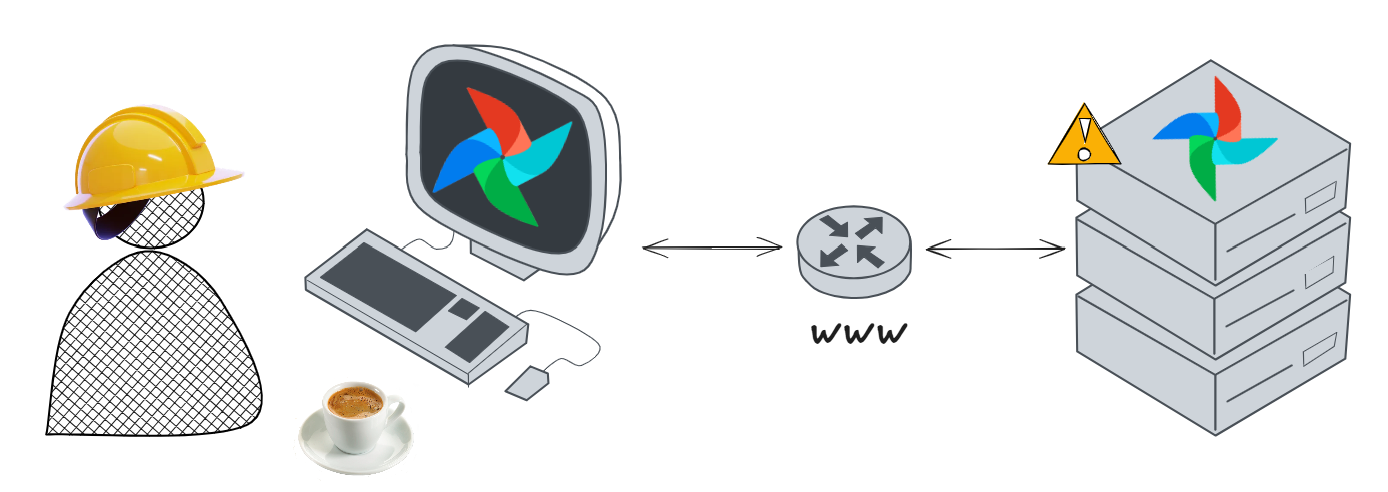

When integrated into your backend, your Airflow deployment will typically receive DAG invocation requests from your API server or, less often, from you via the Airflow web UI.
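As a rough illustration of what a DAG invocation request from your API server could look like, the sketch below builds (but does not send) an HTTP request using only the standard library. It assumes Airflow 2.x with its stable REST API and basic auth enabled. The host, the credentials, the DAG id `prioritize_todos`, and the `user_id` parameter are all hypothetical.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf, username, password):
    """Build a POST request that asks Airflow to start a new run of `dag_id`."""
    # Assumption: Airflow 2.x stable REST API path for creating a DAG run.
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    # `conf` is passed through to the DAG run as its run configuration.
    body = json.dumps({"conf": conf}).encode("utf-8")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


# Hypothetical local deployment and DAG id, for illustration only.
request = build_trigger_request(
    "http://localhost:8080", "prioritize_todos", {"user_id": 42}, "admin", "admin"
)
# Sending it would be: urllib.request.urlopen(request)
```

In a real backend you would likely send this from a background-safe code path and store the returned run id so the frontend can poll for progress.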

In most cases, your Airflow tasks should modify platform resources via requests to your backend API. In some cases it may be justified for tasks to read directly from your database and, less frequently, to write to it.

While Airflow is powerful, it's not suitable for every use case. Avoid using Airflow when:

Rule of thumb: if your workflow is guaranteed to complete in under 3 seconds, use the traditional request-response pattern. Otherwise, consider whether writing the workflow as an Airflow DAG is a good fit.

You can and likely will run Airflow in at least two different ways if you decide to use it.

Running Airflow locally is ideal when you're just getting started, experimenting with new Airflow concepts, building new workflows, or modifying existing ones. Using local Airflow to write to production systems for one-off workflows may be tempting, but I discourage it, especially if you've already launched and have users.

A cloud-based deployment is recommended for production use that requires integration with the rest of your backend, and for workflows that need to run on a schedule or in response to events within your platform. You'll want your CI/CD pipeline to manage the rollout of changes to your Airflow cloud deployment.

The subcategories of cloud-based deployment are self-managed and managed deployments.

Self-managed will be the focus of this series.

| Type | Cost | Maintenance | Insight |
|---|---|---|---|
| Self-managed | 💸 | 🔧🔧🔧 | 🎓🎓🎓 |
| Managed | 💰💰💰 | 🔧 | 🎓 |

Self-managed deployments are the most cost-effective option for small and mid-scale production use cases. You'll need to handle Airflow updates and DB migrations yourself if you want to run the latest version of Airflow. The upside is that you'll learn more about Airflow and workflow orchestration along the way.

Popular managed options include AWS MWAA and Astronomer. You'll pay more out of pocket because updates and DB upgrades are handled for you. The advantage is that you get to focus on writing your DAGs instead of managing infrastructure.

Enough chit chat. Let's get to the good stuff.

Getting started with local Airflow development

Running, fixing, and designing DAGs

Integrating LLMs into your workflows

Moving from localhost to a basic production deployment

Building complex LLM workflows